If you’re optimizing for AI search without understanding query fan-out, you’re fighting blind. Here’s everything marketers need to know about how modern search engines actually retrieve and rank content in 2025.

We’ve spent the last eight months analyzing millions of AI search results across ChatGPT, Google’s AI Mode, Perplexity, and Gemini. The single most important discovery? Query fan-out determines who shows up in AI answers and who doesn’t, and most marketing teams still don’t understand how it works.

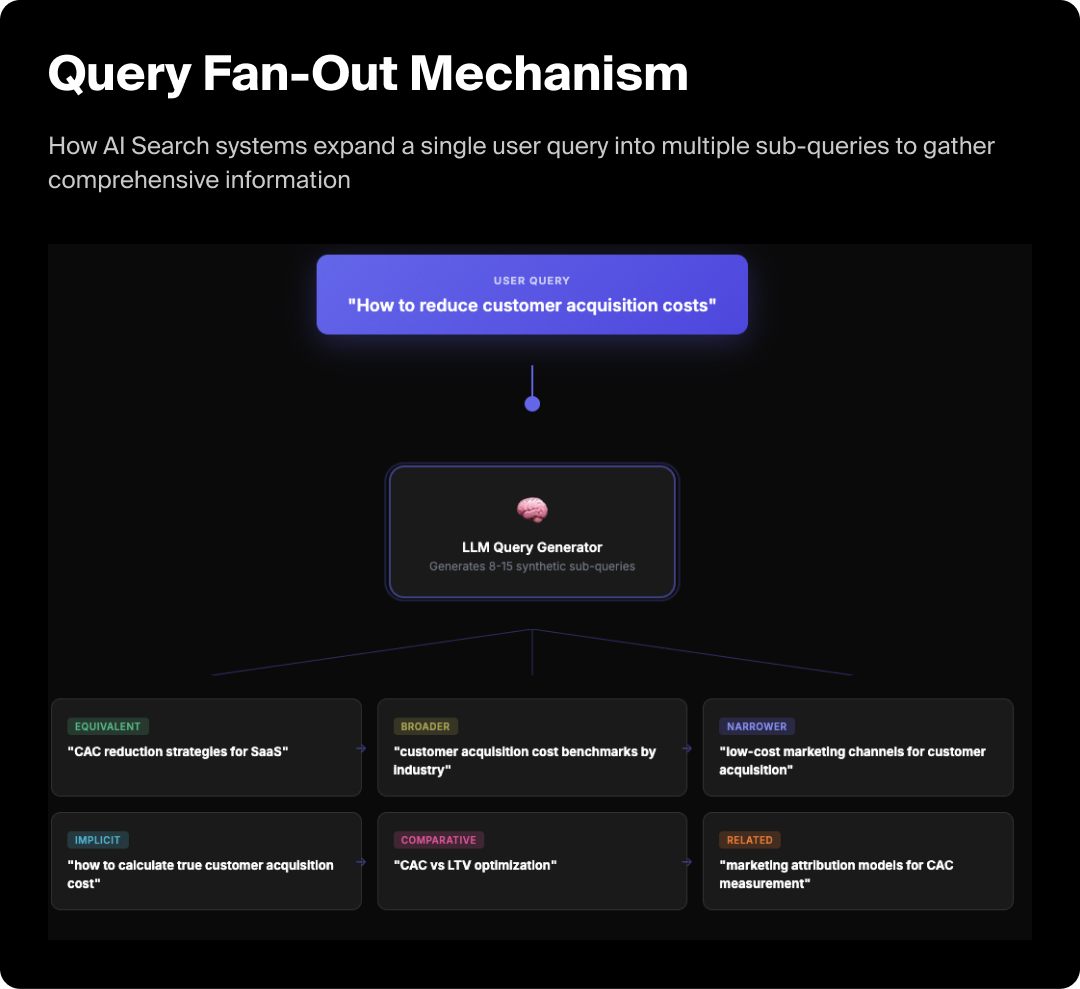

Let’s get into it: when someone asks ChatGPT, “what’s the best marketing automation platform for B2B SaaS companies?” the system doesn’t just search for that phrase. It generates 8-15 sub-queries behind the scenes, retrieves hundreds of sources, selects specific passages from each, and synthesizes them into a single answer.

Your brand’s visibility depends entirely on whether you have the best passage for any of those synthetic queries: queries you’ve never seen and can’t predict with traditional keyword research.

This is query fan-out in action, and it’s fundamentally changing how search works.

What Is Query Fan-Out?

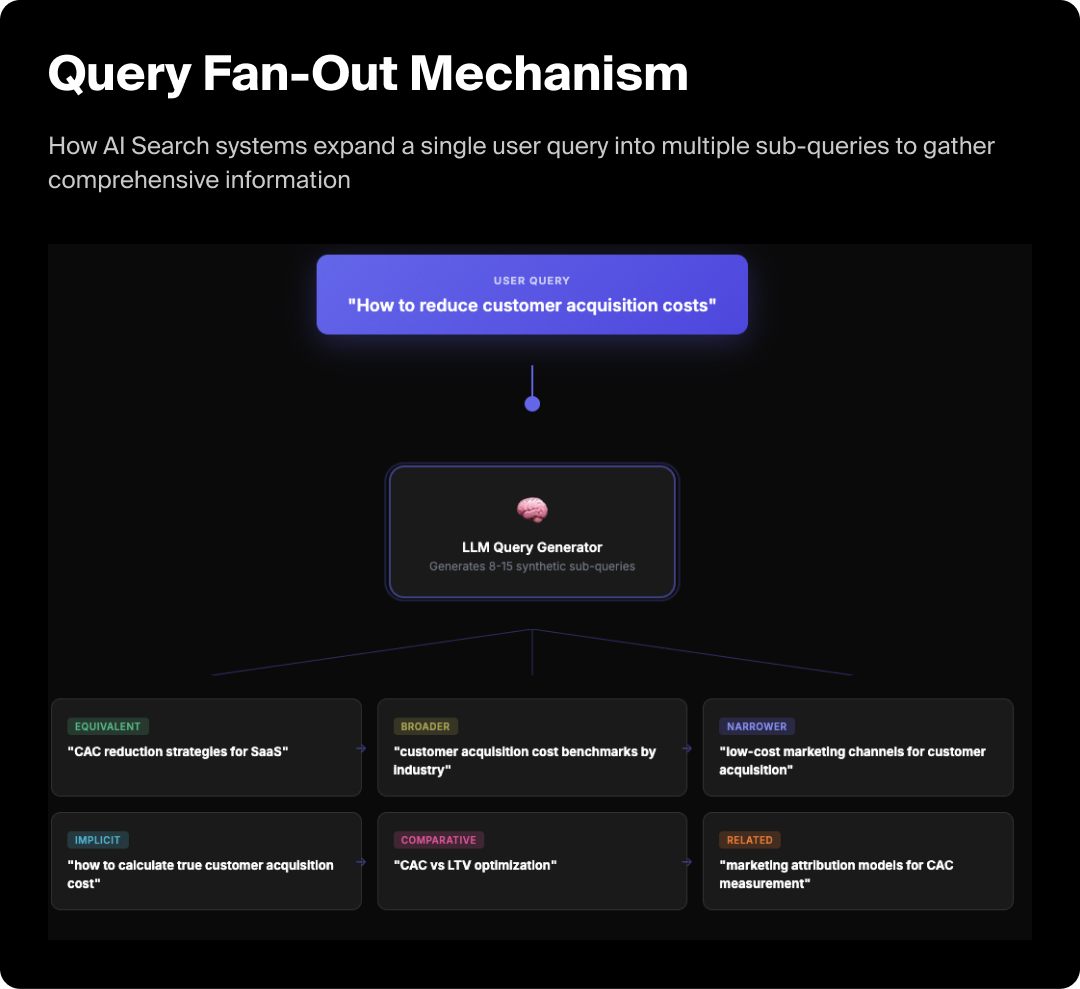

Query fan-out is an information retrieval technique where AI search systems expand a single user query into multiple sub-queries, executing them in parallel to gather comprehensive information before generating a response.

Think of it this way: traditional search takes one question and returns one ranked list of pages. Query fan-out takes one question, breaks it into 5-20 related questions, searches for all of them simultaneously, then weaves the best answers together.

Here’s a real example from our testing at NoGood:

User query: “how to reduce customer acquisition costs”

Fan-out queries ChatGPT generated:

- “CAC reduction strategies for SaaS”

- “customer acquisition cost benchmarks by industry”

- “low-cost marketing channels for customer acquisition”

- “how to calculate true customer acquisition cost”

- “CAC vs LTV optimization”

- “marketing attribution models for CAC measurement”

Each of these sub-queries retrieved different sources. The final answer combined passages from six different websites, none of which ranked #1 for the original query.

This pattern repeats across every major AI search platform. Google’s documentation for AI Mode explicitly states that it uses query fan-out to “capture different possible user intents, retrieving more diverse, broader results from different sources.” ChatGPT, Perplexity, and Microsoft Copilot all use similar architectures built on Retrieval-Augmented Generation (RAG).

Why Query Fan-Out Matters More Than Rankings

The shift from single-query to multi-query retrieval breaks every assumption that traditional SEO is built on:

- In traditional search, visibility is binary: either you rank on Page One for a keyword, or you don’t.

- In AI search, visibility is probabilistic: you might be retrieved for dozens of synthetic queries, but only cited for a few. Or, you might not rank well for the primary keyword, but dominate the sub-queries that actually determine citation inclusion.

We’ve seen this play out repeatedly with NoGood clients.

We track a SaaS company that ranks #8 for “project management software,” but appears in 67% of AI-generated answers about project management. How? Their content addresses the full constellation of sub-queries (pricing comparisons, integration capabilities, use case breakdowns, team size recommendations) that AI systems generate when researching that category.

Meanwhile, a competitor ranking #2 for the head term appears in just 12% of answers. They optimized for rankings, not for topical coverage.

The data backs this up. Mike King’s analysis of Google’s AI Mode shows that citation selection happens at the passage level, not page level: a single paragraph from a lower-ranking page can beat a comprehensive guide if that paragraph better answers a specific sub-query.

How Query Fan-Out Actually Works: The Technical Architecture

Understanding the mechanics helps you know where optimization actually matters.

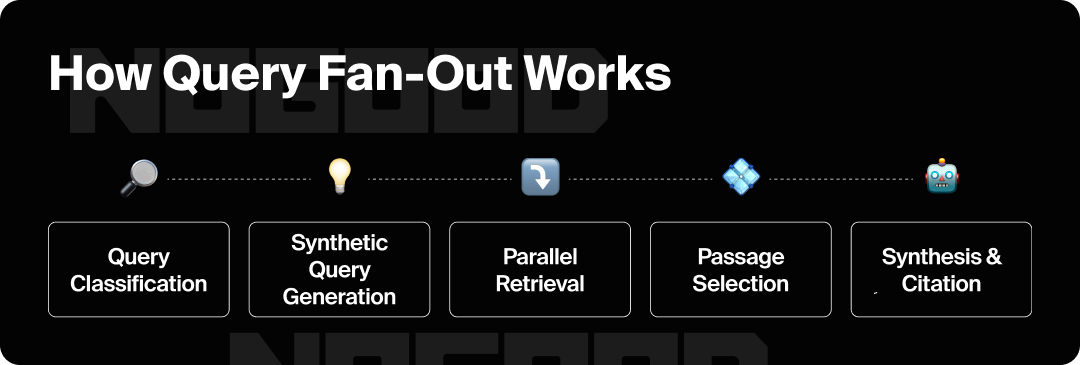

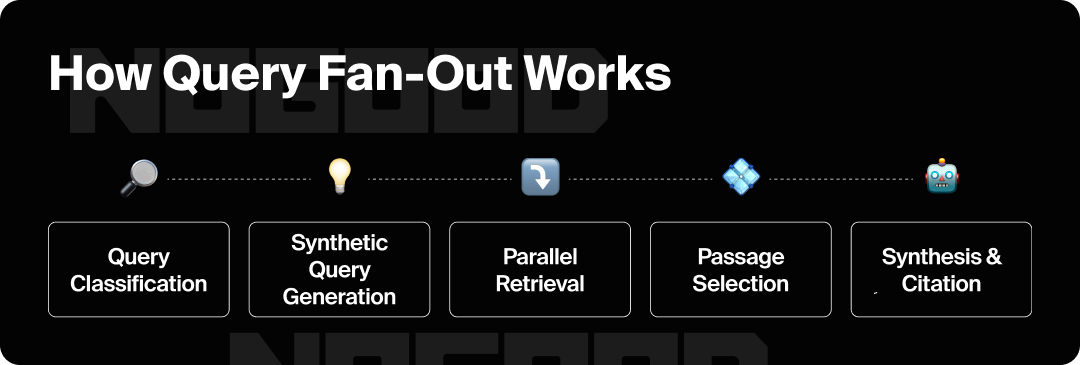

Query fan-out sits within the RAG pipeline, the standard architecture for how AI systems ground their responses in web data. Here’s the step-by-step process:

Step 1: Query Classification

When you submit a prompt, the system analyzes:

- Complexity: Simple factual queries (“what is SEO?”) might not trigger fan-out. Complex, exploratory, or comparative queries do.

- Recency Needs: Questions about “latest,” “current,” or time-sensitive topics trigger web search.

- Confidence Score: If the model’s training data can’t answer reliably, it searches the web.

💡 Pro Tip: For Gemini models, you can actually configure this threshold via the API’s dynamicThreshold parameter.
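To make the three signals concrete, here’s a minimal sketch of a trigger check. It is a toy heuristic, not how any production system decides: the cue lists, the `should_fan_out` name, the `model_confidence` input, and the 0.7 threshold are all assumptions for illustration. Real systems almost certainly use learned classifiers rather than keyword rules.

```python
# Hypothetical cue lists chosen for illustration only.
RECENCY_CUES = ("latest", "current", "today", "this year")
COMPARISON_CUES = (" vs ", " versus ", "best ", "compare", "alternatives")


def should_fan_out(prompt: str, model_confidence: float, threshold: float = 0.7) -> bool:
    """Return True when a prompt looks time-sensitive, comparative, or low-confidence."""
    text = f" {prompt.lower()} "  # pad so cues like " vs " match at the edges
    needs_recency = any(cue in text for cue in RECENCY_CUES)
    is_comparative = any(cue in text for cue in COMPARISON_CUES)
    low_confidence = model_confidence < threshold  # assumed confidence score in [0, 1]
    return needs_recency or is_comparative or low_confidence
```

Under these assumptions, “what is SEO?” with a high confidence score skips fan-out, while a “best … for …” prompt triggers it regardless of confidence.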

Step 2: Synthetic Query Generation

An LLM generates multiple sub-queries using structured prompting. Google’s patent (US20240289407A1) reveals that the system can produce eight distinct query types:

- Equivalent queries (alternative phrasings)

- Broader queries (higher-level concepts)

- Narrower queries (more specific angles)

- Related queries (semantically adjacent topics)

- Comparative queries (X vs. Y)

- Personalized queries (tailored to user context)

- Implicit queries (unstated but likely needs)

- Parallel queries (sibling topics)

The model doesn’t randomly generate these. It follows specific prompts like “generate comparative variations of this query” or “what implicit questions might this user have?”
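As a rough illustration of structured prompting, the sketch below builds one instruction per query type from the list above. The instruction wording and the `build_fanout_prompts` helper are our own assumptions; the actual prompts these systems use are not public.

```python
# One illustrative instruction per query type; the wording is assumed, not sourced.
QUERY_TYPES = {
    "equivalent": "Rephrase this query without changing its meaning.",
    "broader": "Write a higher-level version of this query.",
    "narrower": "Write a more specific version of this query.",
    "related": "Write a query about a semantically adjacent topic.",
    "comparative": "Generate comparative variations of this query.",
    "personalized": "Tailor this query to the user's known context.",
    "implicit": "What implicit questions might this user have?",
    "parallel": "Write a query about a sibling topic.",
}


def build_fanout_prompts(user_query: str) -> dict[str, str]:
    """Return a prompt for each query type, ready to send to an LLM."""
    return {
        query_type: f'{instruction}\nQuery: "{user_query}"'
        for query_type, instruction in QUERY_TYPES.items()
    }
```

Calling `build_fanout_prompts("how to reduce customer acquisition costs")` yields eight prompts, one per type; each LLM response would become a synthetic query for the next step.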

Step 3: Parallel Retrieval

All synthetic queries execute simultaneously. For ChatGPT, this means hitting Bing’s API multiple times in parallel. For Google AI Mode, it’s Google’s own infrastructure plus specialized databases (Shopping Graph, Knowledge Graph, Finance, etc.).

This parallel execution is critical. Running 15 queries sequentially would take 15x longer. Parallel execution takes roughly the same time as a single query.
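The timing claim is easy to verify with a small simulation. The sketch below uses Python’s `asyncio` with a fake search function that simply sleeps; `fake_search`, `fan_out`, and the 50 ms latency are stand-ins we made up, not a real search API.

```python
import asyncio
import time


async def fake_search(query: str, latency: float = 0.05) -> list[str]:
    """Stand-in for a search API call; sleeps to simulate network latency."""
    await asyncio.sleep(latency)
    return [f"result for {query!r}"]


async def fan_out(queries: list[str]) -> list[list[str]]:
    """Run every sub-query concurrently; results come back in input order."""
    return await asyncio.gather(*(fake_search(q) for q in queries))


def timed_fan_out(queries: list[str]) -> tuple[list[list[str]], float]:
    """Execute the fan-out and report wall-clock seconds elapsed."""
    start = time.perf_counter()
    results = asyncio.run(fan_out(queries))
    return results, time.perf_counter() - start
```

With 15 queries at 50 ms each, a sequential loop would need about 0.75 seconds, while the concurrent version finishes in roughly the time of one call.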

Step 4: Passage Selection

Retrieved documents get chunked into semantic passages (typically 200-500 tokens each). Each passage is evaluated independently using multiple signals:

- Reciprocal Rank Fusion: Passages appearing in results for multiple sub-queries get boosted. If a chunk ranks well for both “marketing automation pricing” and “HubSpot vs. Marketo cost,” it scores higher than content ranking for just one.

- Pairwise LLM Ranking: The model compares passages head-to-head: “which better answers this question: A or B?” This happens across many pairs to build the final ranking.

- Freshness Scoring: Newer content gets preference for time-sensitive queries. Ahrefs’ analysis shows that AI systems cite content averaging 25.7% fresher than traditional search results.
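Reciprocal Rank Fusion is simple enough to sketch in a few lines. The version below is the textbook formulation, where each passage earns 1 / (k + rank) for every result list it appears in, with k = 60 as the commonly used constant. How any specific AI search product weights or combines this with the other signals is not public, and the passage IDs are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    rankings: dict[str, list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Fuse per-sub-query rankings into one list, best passage first.

    rankings maps each sub-query to its passage IDs in ranked order.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranked_passages in rankings.values():
        for rank, passage_id in enumerate(ranked_passages, start=1):
            scores[passage_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical passage IDs: "p2" appears in both result lists.
fused = reciprocal_rank_fusion({
    "marketing automation pricing": ["p1", "p2", "p3"],
    "HubSpot vs. Marketo cost": ["p2", "p4"],
})
```

Here `p2` comes out on top even though it is not ranked first for the pricing query, because it scores in both lists. That is the boost described above.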

Step 5: Synthesis & Citation

The model generates the final answer, citing sources that support specific claims. Importantly, not all retrieved content gets cited: only passages that make it through the selection filters appear as citations.

How to See Query Fan-Out Data Yourself

The breakthrough for practitioners came when we discovered that we could actually access the synthetic queries AI systems generate. Here are the three primary methods:

Method 1: ChatGPT via Chrome DevTools

ChatGPT exposes fan-out queries in its network requests.

- Open ChatGPT and Chrome DevTools (right-click → Inspect)

- Go to the Network tab

- Submit a query that triggers web search

- In the Network panel, find fetch/XHR requests

- Click on a request, and go to the Response tab

- Search (Ctrl+F) for search_model_queries

You’ll see JSON containing the actual queries that ChatGPT sent to Bing.
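If you save a response body to a file, a short script can pull the queries out for you. This sketch assumes the copied body is a single valid JSON document and makes no assumption about where `search_model_queries` sits in it, so it walks the whole structure. The payload shape is undocumented and may change, and the helper names are ours.

```python
import json


def find_key(node, target: str) -> list:
    """Recursively collect every value stored under `target` in nested JSON."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key == target:
                found.append(value)
            found.extend(find_key(value, target))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_key(item, target))
    return found


def extract_fanout_queries(raw_response: str) -> list:
    """Return every `search_model_queries` value found in a copied response body."""
    return find_key(json.loads(raw_response), "search_model_queries")
```

If the response is a stream of events rather than one JSON object, you would need to split it into individual JSON chunks first and run `extract_fanout_queries` on each.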

Several Chrome extensions also automate this process.

Method 2: Gemini Grounding API

Google provides official access through the Gemini API. When you enable grounding, the response includes a groundingMetadata object with a webSearchQueries array showing exactly what Google searched for.

This is the most authoritative data for optimizing for Google AI Overviews and AI Mode, since it’s first-party data directly from Google’s systems.
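Once you have a grounded response as parsed JSON, extracting the queries is a short loop. The sketch below assumes the REST response shape, where each entry under `candidates` may carry a `groundingMetadata` object; the sample payload is invented, and the request itself (model choice, API key, enabling the search tool) is left out.

```python
def web_search_queries(response: dict) -> list[str]:
    """Collect webSearchQueries from every candidate in a parsed Gemini response."""
    queries: list[str] = []
    for candidate in response.get("candidates", []):
        metadata = candidate.get("groundingMetadata", {})
        queries.extend(metadata.get("webSearchQueries", []))
    return queries


# Invented sample payload that mirrors the assumed response shape.
sample_response = {
    "candidates": [
        {
            "groundingMetadata": {
                "webSearchQueries": [
                    "CAC reduction strategies for SaaS",
                    "customer acquisition cost benchmarks by industry",
                ]
            }
        }
    ]
}
```

Responses that did not trigger a search simply have no `groundingMetadata`, in which case the function returns an empty list.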

Method 3: Purpose-Built Tools

Several specialized tools now exist:

- Qforia (by Mike King and iPullRank): Free tool that uses Gemini to simulate fan-out for both AI Overviews and AI Mode. It shows query types, user intent, and recommended content formats.

- DEJAN’s Query Fan-Out Generator: One of the earliest tools, trained on Google’s patent patterns. Includes search volume predictions.

- Profound’s Query Fanouts: Part of their AEO platform, automatically captures real fan-outs from millions of daily prompts across multiple AI engines.

- Goodie’s Optimization Hub

The Biggest Mistake: Chasing Individual Fan-Out Queries

Here’s where most teams go wrong.

You discover that ChatGPT generates queries like “marketing automation for e-commerce stores” and “email marketing software with SMS integration.” The temptation is, of course, to create separate pages for each.

This is the hyper long-tail trap (and spoiler alert: it’s a massive waste of resources).

Why? Fan-out queries are inherently unstable and personalized.

Surfer’s research found that only 27% of fan-out queries remain consistent across multiple runs of the same prompt, and 66% of fan-outs appear only once across ten test runs. On top of that, the queries generated when you test a prompt differ from those real users trigger, which vary with location, search history, device, and conversation context.

You can’t optimize for targets that shift every time the model runs.
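You can measure this instability on your own prompts. The sketch below takes the fan-out queries captured from several runs of one prompt and reports what share showed up in every run versus only once. It is our own simple definition of stability, not necessarily the methodology behind the figures above.

```python
from collections import Counter


def fanout_stability(runs: list[set[str]]) -> dict[str, float]:
    """Share of distinct fan-out queries seen in every run vs. in exactly one run."""
    counts = Counter(query for run in runs for query in run)
    total = len(counts)
    if total == 0:
        return {"in_every_run": 0.0, "seen_once": 0.0}
    in_every_run = sum(1 for c in counts.values() if c == len(runs))
    seen_once = sum(1 for c in counts.values() if c == 1)
    return {"in_every_run": in_every_run / total, "seen_once": seen_once / total}
```

Each run is a set, so a query repeated within one run counts once. Exact string matching is strict; normalizing case or grouping near-duplicates would raise the stability numbers.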

The Right Strategy: Topic Cluster Aggregation

Instead of chasing individual queries, aggregate fan-out data to identify persistent topical themes.

Here’s NoGood’s framework:

Step 1: Generate Fan-Outs at Scale

Run fan-out analysis for 20-30 related prompts in your category. Test each prompt 3-5 times using multiple tools. For “marketing automation,” we’d test:

- “best marketing automation software”

- “marketing automation for small business”

- “HubSpot vs Marketo vs Pardot”

- “how to choose marketing automation platform”

- “marketing automation pricing comparison”

Step 2: Identify Recurring Patterns

Cluster fan-outs by theme. Look for:

- Which entities appear repeatedly (HubSpot, Marketo, ActiveCampaign)

- Which attributes get mentioned (pricing, integrations, ease of use)

- Which comparisons emerge (Platform A vs. Platform B)

- Which use cases surface (eCommerce, B2B SaaS, agencies)
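A first pass at this clustering can be as simple as counting term matches. The sketch below tallies how often known entities, attributes, and use cases appear across collected fan-out queries. The `THEMES` lists are seeded from the examples above and are assumptions; in practice you would build them from your own data, and substring matching is a crude stand-in for proper entity recognition.

```python
from collections import Counter

# Seed terms taken from the examples above; extend with your own category's terms.
THEMES = {
    "entity": ["hubspot", "marketo", "activecampaign"],
    "attribute": ["pricing", "integrations", "ease of use"],
    "use_case": ["ecommerce", "b2b saas", "agencies"],
}


def count_themes(fanout_queries: list[str]) -> Counter:
    """Count how many fan-out queries mention each (group, term) pair."""
    counts: Counter = Counter()
    for query in fanout_queries:
        text = query.lower()
        for group, terms in THEMES.items():
            for term in terms:
                if term in text:
                    counts[(group, term)] += 1
    return counts
```

Sorting the result with `counts.most_common()` surfaces the themes that persist across prompts and runs, which are the ones worth building content around.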

Step 3: Build Content Architecture Around Themes

Create a content structure that addresses all major themes:

- Pillar Content: Comprehensive guides covering the full topic

- Entity Pages: Deep dives on specific products and/or companies

- Attribute Pages: Dedicated coverage of key features and capabilities

- Comparison Pages: Side-by-side evaluations

- Use Case Pages: Industry or scenario-specific guides

This ensures that you’re discoverable, regardless of which specific fan-out gets generated.

How to Optimize Content for Query Fan-Out

AI systems select content at the passage level, not page level. This requires a different optimization approach.

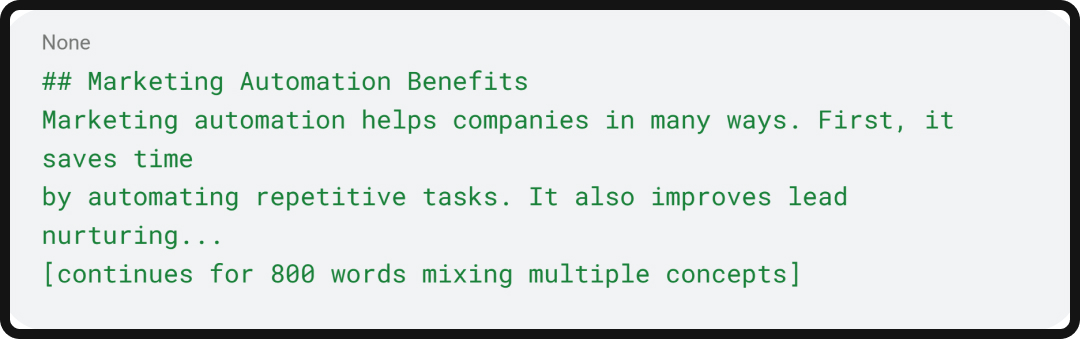

Create Modular, Self-Contained Sections

Each section should independently answer a specific question. Use clear H2s and H3s that match natural language queries.

Wrong approach:

Right approach:

Each section can be extracted and cited independently.

Use Structured Formats

AI systems preferentially select certain content types:

- Tables for comparisons and specifications

- Bulleted lists for features or steps

- FAQ sections with question-answer pairs

- How-to schemas for procedural content

- Comparison schemas for product evaluations

These formats are easier for AI systems to parse and extract.

Address Entity-Attribute Coverage

Fan-out analysis reveals which entities (products, companies, concepts) and attributes (features, specs, prices) matter for your topic.

Build a coverage matrix:

| Entity | Attribute 1 | Attribute 2 | Attribute 3 | Attribute 4 |
| --- | --- | --- | --- | --- |
| HubSpot | ✓ | ✓ | ✓ | ✓ |
| Marketo | ✓ | ✓ | ? | ✓ |
| Pardot | ✓ | ? | ✓ | ? |

Gaps represent optimization opportunities. Fill them systematically to ensure comprehensive coverage.
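If you keep the matrix as data, finding the gaps takes one function. In this sketch the attribute names (`pricing`, `integrations`, `ease_of_use`, `use_cases`) are placeholders we chose for illustration, and the True/False pattern mirrors the ✓ and ? cells in the matrix above.

```python
def coverage_gaps(matrix: dict[str, dict[str, bool]]) -> list[tuple[str, str]]:
    """Return (entity, attribute) pairs that have no content coverage yet."""
    return [
        (entity, attribute)
        for entity, attributes in matrix.items()
        for attribute, covered in attributes.items()
        if not covered
    ]


# Placeholder attribute names; True means you already have content for that cell.
matrix = {
    "HubSpot": {"pricing": True, "integrations": True, "ease_of_use": True, "use_cases": True},
    "Marketo": {"pricing": True, "integrations": True, "ease_of_use": False, "use_cases": True},
    "Pardot": {"pricing": True, "integrations": False, "ease_of_use": True, "use_cases": False},
}
gaps = coverage_gaps(matrix)
```

The resulting list works as a content backlog: each pair is a passage or page that does not exist yet.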

Maintain Freshness

AI systems heavily weight recency for time-sensitive topics. We’ve found that content updated within the last 90 days gets cited 2.3x more often than content older than 12 months for dynamic categories.

Set up quarterly refresh cycles for core content, updating:

- Product information and pricing

- Statistics and data points

- Examples and case studies

- Screenshots and visuals
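A refresh cycle is easier to keep when overdue pages are flagged automatically. This minimal sketch assumes you can export a last-updated date per URL from your CMS; the function name, the URLs, and the 90-day default are illustrative.

```python
from datetime import date, timedelta


def pages_due_for_refresh(
    last_updated: dict[str, date], today: date, max_age_days: int = 90
) -> list[str]:
    """Return URLs whose last update is older than max_age_days, sorted by URL."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(url for url, updated in last_updated.items() if updated < cutoff)


# Hypothetical export of page URLs and their last-updated dates.
due = pages_due_for_refresh(
    {
        "/pricing": date(2025, 6, 1),
        "/guide": date(2025, 1, 15),
        "/compare": date(2025, 3, 31),
    },
    today=date(2025, 6, 30),
)
```

Passing `today` explicitly keeps the check reproducible; in a scheduled job you would pass `date.today()`.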

How to Measure Success in AI Search

Traditional SEO KPIs don’t work for query fan-out optimization. Here are your updated AEO / AI Search KPIs:

- Citation Rate: Percentage of relevant prompts where your content appears in AI answers. Target 15-25% for your category.

- Share of Citations: Your citations divided by total citations in the answer. Aim for 20%+ share in core categories.

- Citation Position: Where your source appears in the citation list. Earlier = better visibility and credibility.

- Topic Coverage Score: Percentage of identified themes your content addresses. Calculate by identifying 20-30 core themes from fan-out analysis, then auditing your content coverage. Target 80%+ coverage.
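Three of these KPIs reduce to simple ratios, sketched below. The function names and inputs are our own framing of the definitions above. How you count a citation (per answer, per link, per platform) is a measurement choice you need to fix before comparing numbers over time.

```python
def citation_rate(prompts_with_citation: int, prompts_tested: int) -> float:
    """Share of tested prompts where your content was cited at least once."""
    return prompts_with_citation / prompts_tested if prompts_tested else 0.0


def share_of_citations(own_citations: int, total_citations: int) -> float:
    """Your citations divided by all citations across the sampled answers."""
    return own_citations / total_citations if total_citations else 0.0


def topic_coverage(themes_covered: set[str], themes_identified: set[str]) -> float:
    """Share of identified fan-out themes that your content addresses."""
    if not themes_identified:
        return 0.0
    return len(themes_covered & themes_identified) / len(themes_identified)
```

For example, being cited in 6 of 30 tested prompts gives a citation rate of 20%, which falls inside the 15-25% target range.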

Tools like Goodie, Profound, BrightEdge, and custom monitoring setups can track these metrics across multiple AI platforms.

Query Fan-Out: FAQs

Does query fan-out replace traditional SEO?

No. Query fan-out is additive to existing SEO best practices. Strong traditional SEO (technical health, quality content, authoritative backlinks) remains the foundation. Fan-out optimization is about structuring that content to perform well in AI retrieval systems.

How often do fan-out queries change?

They’re probabilistic, so the same prompt generates different queries each time. However, the underlying themes remain relatively stable. Focus on themes, not individual queries.

Can I see which fan-outs drove traffic to my site?

Not currently. Google Search Console and Google Analytics don’t break out AI Mode or AI Overview traffic separately, and they don’t show synthetic queries. This is one of the biggest measurement challenges in AI search optimization.

Do all queries trigger fan-out?

No. Simple factual queries often don’t need it. Fan-out typically triggers for complex, exploratory, comparative, or time-sensitive queries where comprehensive information improves the answer.

Is query fan-out the same across all AI platforms?

The concept is the same, but implementation varies. ChatGPT uses Bing for retrieval, Google AI Mode uses Google’s infrastructure, Perplexity uses multiple search providers. The specific queries generated differ by platform.

Looking Forward: The Future of Query Fan-Out

Query fan-out is evolving rapidly. Here’s what we’re seeing:

- Deeper Personalization: Fan-outs increasingly incorporate user history, preferences, location, and conversation context. The same prompt generates different queries for different users.

- Multi-Modal Expansion: Future systems will fan out across text, images, video, and structured data simultaneously, creating richer information sets.

- Longer Reasoning Chains: Advanced features like ChatGPT’s Deep Research and Gemini’s Deep Research can generate hundreds of queries for complex questions, creating extensive retrieval networks.

- Agentic Workflows: AI agents will use fan-out to plan multi-step tasks, not just answer questions. This creates new optimization opportunities around task-based content.

The fundamental shift is this: search is no longer deterministic. You can’t control exactly what queries get generated or which passages get selected. You can only increase your probability of appearing by building comprehensive, well-structured content that addresses the full topical landscape.

Query fan-out gives you a window into how AI systems think about topics. Use that window wisely. Build content that consistently appears across thousands of possible retrieval paths, not content optimized for a single keyword.

The brands that understand this (that is, the ones that treat AI search as a probabilistic system requiring topical authority rather than a deterministic system requiring keyword optimization) will dominate AI search for the next decade.

We’re still in the early innings of this transformation. The tools will get better, the data will become more accessible, and best practices will continue to evolve. But the core principle remains: comprehensive topical coverage beats keyword optimization in a query fan-out world.

At NoGood, we’re actively testing and refining these approaches with our clients. The results speak for themselves: brands implementing topic cluster strategies based on fan-out analysis are seeing 40-85% increases in AI citations within 90 days, even as traditional SEO remains flat.

The search landscape has fundamentally changed. Query fan-out is the mechanism driving that change. Understanding it isn’t optional anymore; it’s the baseline requirement for visibility in modern search.